🚀 Knowledge for Your Vector Robot

We bring the best available knowledge to your Vector robot so that you can have wonderful conversations with it.

We have tested hundreds of large language models (LLMs) with the Vector robot and selected the few that work best with his personality.

Become a paid subscriber to our newsletter at http://www.learnwitharobot.com and get an API key for FREE (daily limit of 15 conversations). Reach out to us if you need more than the daily limit.

📡 API Endpoint

This endpoint accepts OpenAI-compatible chat completion requests.

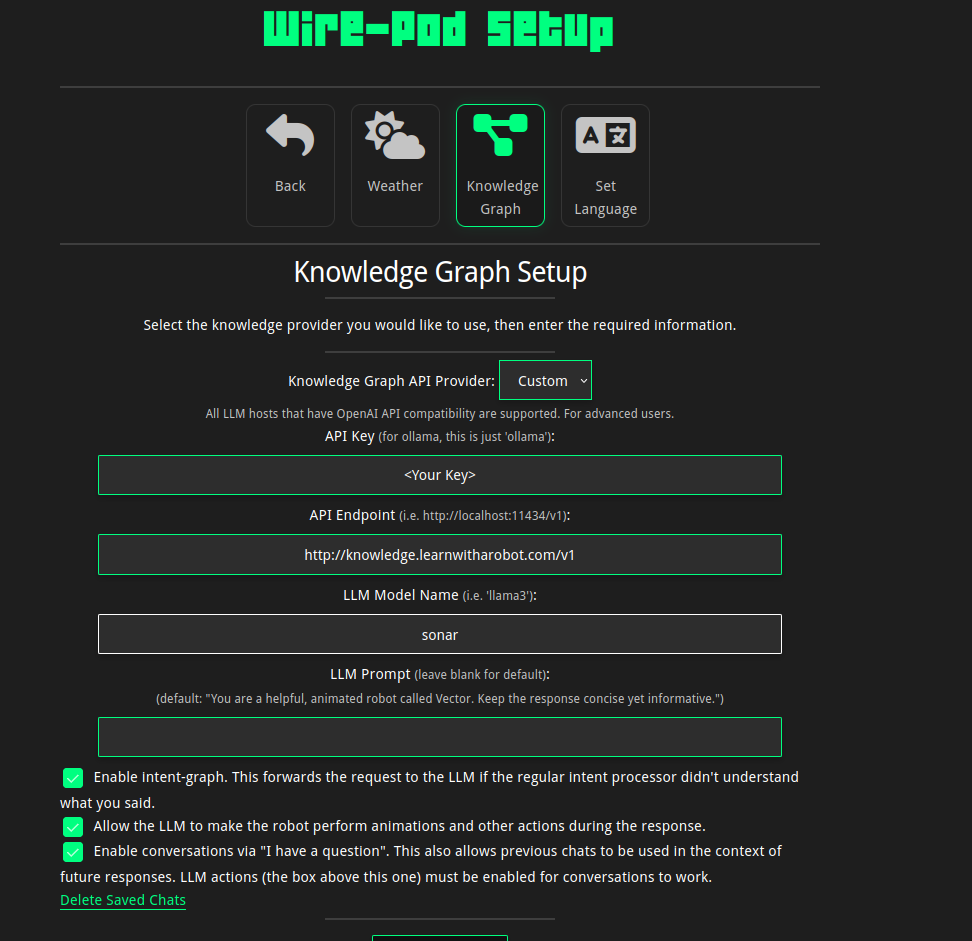

Wirepod configuration

Configure your Wirepod server according to the screenshot below:

Demonstration on a Vector Robot

With our knowledge service, your Vector robot can answer questions as shown in the video below:

🔧 Sample API Request

Here's how to make an API request. Use this when you need to test our API.
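A minimal sketch using only Python's standard library, assuming the service follows the usual OpenAI chat completions shape (JSON request in, reply text at `choices[0].message.content` out). `API_URL` is a hypothetical placeholder, not the real service address; substitute the endpoint URL from the API Endpoint section. The function names `build_chat_request` and `ask` are illustrative only.

```python
import json
import urllib.request

# Hypothetical placeholder: replace with the real endpoint URL.
# This value is NOT the service's actual address.
API_URL = "https://example.com/v1/chat/completions"


def build_chat_request(api_key: str, model: str, question: str,
                       url: str = API_URL) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion POST request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": question}],
    }
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def ask(api_key: str, model: str, question: str) -> str:
    """Send one question and return the assistant's reply text."""
    req = build_chat_request(api_key, model, question)
    with urllib.request.urlopen(req, timeout=30) as resp:
        body = json.load(resp)
    # OpenAI-compatible responses carry the reply in choices[0].message.content
    return body["choices"][0]["message"]["content"]
```

For example, `ask(key, "sonar", "Why is the sky blue?")` should return the model's answer as a string. Building the request separately from sending it makes it easy to inspect the headers and body before touching the network; `urlopen` raises `urllib.error.HTTPError` for the error codes listed under Error Codes.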

📋 Request Format

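The API accepts standard OpenAI-compatible chat completion requests: a JSON body containing a model name ("model") and a list of messages ("messages"), each with a "role" and a "content" field.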
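The sketch below builds such a body and rejects obviously malformed messages before they are sent. The role set (`system`, `user`, `assistant`) is the standard OpenAI one; whether this service honors a `system` message is an assumption, and `build_payload` is a hypothetical helper name.

```python
import json

# Roles used by the standard OpenAI chat completion message format.
VALID_ROLES = {"system", "user", "assistant"}


def build_payload(model: str, messages: list) -> str:
    """Return a JSON request body in the OpenAI chat completion shape.

    Raises ValueError for malformed messages, which the server would
    likely reject anyway with a 400 error.
    """
    if not messages:
        raise ValueError("messages must not be empty")
    for msg in messages:
        if msg.get("role") not in VALID_ROLES:
            raise ValueError(f"unknown role: {msg.get('role')!r}")
        if not isinstance(msg.get("content"), str):
            raise ValueError("content must be a string")
    return json.dumps({"model": model, "messages": messages})
```

For instance, `build_payload("sonar", [{"role": "user", "content": "What is the tallest mountain?"}])` returns the JSON string to POST. Earlier turns of a conversation can be included by appending alternating `user` and `assistant` messages in order.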

🔐 Authentication

All requests must include a valid Bearer token in the Authorization header, in the form "Authorization: Bearer <your API key>".

Tokens can be requested from Amitabha Banerjee, the editor of www.learnwitharobot.com. Send him a direct message on Substack.
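To keep the token out of source files, one option is to read it from an environment variable. `VECTOR_API_KEY` and `auth_headers` below are hypothetical names chosen for illustration; only the header format itself follows the standard Bearer scheme.

```python
import os
from typing import Dict, Optional

# Hypothetical environment variable name; store your key wherever suits you.
KEY_ENV_VAR = "VECTOR_API_KEY"


def auth_headers(api_key: Optional[str] = None) -> Dict[str, str]:
    """Return the Authorization header for a Bearer token.

    Falls back to the KEY_ENV_VAR environment variable when no key is
    passed, and strips stray whitespace (e.g. a trailing newline from a
    copy-paste) that would otherwise make the token invalid.
    """
    key = (api_key or os.environ.get(KEY_ENV_VAR, "")).strip()
    if not key:
        raise ValueError(f"no API key given and {KEY_ENV_VAR} is not set")
    return {"Authorization": f"Bearer {key}"}
```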

📊 Available Providers and Models

The supported LLM providers and their models are listed below. To try a different model, replace the keyword sonar in the Wirepod screenshot above with the name of the model you want (e.g. grok-4-latest). Note that we cannot guarantee that all of these models are available at all times.

- XAI: grok-4-latest, grok-3-latest

- Perplexity: sonar

- Sambanova: DeepSeek-V3-0324

- Parallel AI: speed

- OpenAI: gpt-4.1-mini
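Because an unsupported model is rejected with a 400 error (see Error Codes), a client can check the model name locally before sending a request. This sketch simply mirrors the list above; it assumes model names are matched exactly and case-sensitively, and it will go stale whenever the supported models change. `SUPPORTED_MODELS` and `provider_for` are hypothetical names.

```python
# Provider -> model names, copied from the list above. Availability can change.
SUPPORTED_MODELS = {
    "XAI": ["grok-4-latest", "grok-3-latest"],
    "Perplexity": ["sonar"],
    "Sambanova": ["DeepSeek-V3-0324"],
    "Parallel AI": ["speed"],
    "OpenAI": ["gpt-4.1-mini"],
}


def provider_for(model: str) -> str:
    """Return the provider serving `model`, or raise ValueError if unlisted."""
    for provider, models in SUPPORTED_MODELS.items():
        if model in models:
            return provider
    raise ValueError(f"unsupported model: {model!r}")
```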

🚨 Error Codes

- 401: Invalid or expired authorization token

- 429: Rate limit exceeded

- 400: Invalid request format or unsupported model

- 500: Internal server error
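One way to act on these codes is sketched below: fail fast on 400 and 401, which need a fix on the client side (request body or token), and retry 429 and 500 with exponential backoff. This retry policy is an assumption, not documented server behavior; in particular, if a 429 comes from the daily limit of 15 conversations, retrying will not help until the limit resets. `send` is a hypothetical callable that performs one request and returns `(status, body)`, for example by wrapping `urllib.request.urlopen` and reading `e.code` from a caught `urllib.error.HTTPError`.

```python
import time
from typing import Callable, Tuple

# Meanings of the status codes listed above.
ERROR_MEANINGS = {
    400: "Invalid request format or unsupported model",
    401: "Invalid or expired authorization token",
    429: "Rate limit exceeded",
    500: "Internal server error",
}

# Assumption: only rate limits and server errors are worth retrying;
# 400 and 401 need a fix on the client side.
RETRYABLE = {429, 500}


def call_with_retry(send: Callable[[], Tuple[int, str]],
                    attempts: int = 3, base_delay: float = 1.0) -> str:
    """Call `send` until it returns status 200, retrying retryable errors
    with exponential backoff (base_delay, 2 * base_delay, ...)."""
    for attempt in range(attempts):
        status, body = send()
        if status == 200:
            return body
        reason = ERROR_MEANINGS.get(status, "Unexpected status")
        # Give up on non-retryable codes or once attempts are exhausted.
        if status not in RETRYABLE or attempt == attempts - 1:
            raise RuntimeError(f"{status}: {reason}")
        time.sleep(base_delay * 2 ** attempt)
    raise RuntimeError("attempts must be at least 1")
```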